Relative Frequency: The Experimental Probability of an Event Happening

From Theory to Experiment: Defining Two Types of Probability

Probability is the study of uncertainty. It gives us a numerical measure of how likely something is to occur. This measure can be found in two main ways: theoretically and experimentally.

Theoretical Probability is based on pure reasoning about a situation. It assumes that all outcomes are equally likely. We calculate it by dividing the number of favorable outcomes by the total number of possible outcomes:

$P(\text{Event}) = \frac{\text{Number of Favorable Outcomes}}{\text{Total Number of Possible Outcomes}}$

For example, a fair six-sided die has six equally likely outcomes, and only one of them is a 4. So, $P(\text{rolling a 4}) = \frac{1}{6}$. This is a prediction based on logic, not on actually rolling the die.
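As a minimal sketch of this calculation, the Python snippet below counts favorable and total outcomes for the die example. The function name `theoretical_probability` is an illustrative choice, and the sketch assumes every outcome is equally likely.

```python
from fractions import Fraction


def theoretical_probability(favorable, total):
    """Favorable outcomes over total outcomes, assuming all are equally likely."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= favorable <= total:
        raise ValueError("favorable must be between 0 and total")
    return Fraction(favorable, total)


# A fair six-sided die has six outcomes; exactly one of them is a 4.
die_faces = [1, 2, 3, 4, 5, 6]
favorable = sum(1 for face in die_faces if face == 4)

p_four = theoretical_probability(favorable, len(die_faces))
print(p_four)         # 1/6
print(float(p_four))  # the same value as a decimal
```

Using `Fraction` keeps the result exact, which mirrors how the probability is written by hand.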

Experimental Probability (Relative Frequency), on the other hand, is determined by actually performing an experiment or by collecting data from past observations. It is the ratio of the number of times an event occurs to the total number of trials performed. This estimate can change as you gather more data, and it tends to get closer to the theoretical probability as the number of trials increases, a principle known as the Law of Large Numbers.

The Heart of the Matter: The Relative Frequency Formula

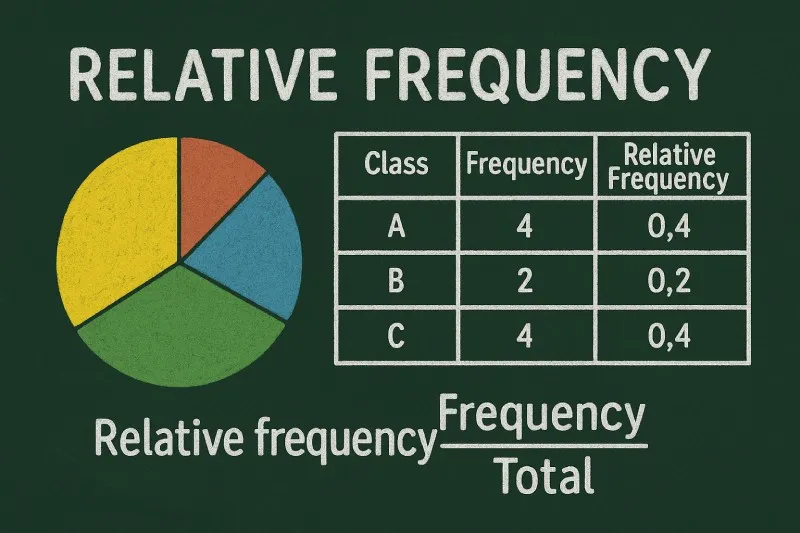

The calculation for experimental probability is straightforward but powerful. It turns raw data into a useful probability estimate.

$\text{Relative Frequency} = \frac{\text{Number of Times the Event Occurs}}{\text{Total Number of Trials}}$

This formula produces a number between 0 and 1. It can also be expressed as a percentage by multiplying the result by 100%.

Imagine you flip a coin 50 times and it lands on heads 23 times. The experimental probability (relative frequency) of getting heads is:

$\frac{23}{50} = 0.46$ or 46%.

The theoretical probability for a fair coin is 50%. With more flips, say 1000, the relative frequency will likely be much closer to 50%.
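The coin example can be sketched in Python as follows. The helper names `relative_frequency` and `simulate_heads_frequency` are hypothetical, and the simulated coin is assumed to be fair. Simulated results vary with the seed, so no particular output is claimed for the loop.

```python
import random


def relative_frequency(event_count, total_trials):
    """Number of times the event occurred divided by the number of trials."""
    if total_trials <= 0:
        raise ValueError("total_trials must be positive")
    return event_count / total_trials


# The worked example from the text: 23 heads in 50 flips.
coin_example = relative_frequency(23, 50)
print(coin_example)  # 0.46


def simulate_heads_frequency(num_flips, seed=0):
    """Flip a simulated fair coin num_flips times; return the share of heads."""
    rng = random.Random(seed)
    heads = sum(1 for _ in range(num_flips) if rng.random() < 0.5)
    return relative_frequency(heads, num_flips)


# More flips should usually bring the relative frequency closer to 0.5.
for n in (50, 1000, 100_000):
    print(n, simulate_heads_frequency(n))
```

Changing the `seed` argument gives a different run of flips, which is a quick way to see how much small experiments vary compared with large ones.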

A Step-by-Step Walkthrough: Conducting Your Own Experiment

Let's solidify our understanding with a detailed example. Suppose a game involves spinning a color wheel divided into 8 equal sections: 3 red, 4 blue, and 1 green.

Step 1: Define the Event. Our event is "the spinner lands on blue."

Step 2: Perform Trials. We spin the spinner 20 times and record the results. Our data shows: Red: 7 times, Blue: 10 times, Green: 3 times.

Step 3: Apply the Formula.

Number of times blue occurs = 10.

Total number of trials = 20.

$\text{Relative Frequency of Blue} = \frac{10}{20} = 0.5$.

Step 4: Interpret. Based on our experiment, the experimental probability of landing on blue is 0.5 or 50%.

Step 5: Compare with Theory. The theoretical probability is calculated from the wheel's design: $\frac{4 \text{ blue sections}}{8 \text{ total sections}} = 0.5$. In this experiment, our result matched the theory perfectly, but that won't always be the case, especially with a small number of trials.
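The five steps above can be sketched in Python using the counts recorded in Step 2. The order of individual spins is not given in the text, so the list below is an arbitrary arrangement of those counts, and the variable names are illustrative.

```python
from collections import Counter
from fractions import Fraction

# Step 2 data: 7 red, 10 blue, 3 green (spin order is arbitrary here).
spins = ["red"] * 7 + ["blue"] * 10 + ["green"] * 3

# Step 3: count each outcome and divide by the number of trials.
counts = Counter(spins)
total = len(spins)
experimental = {color: counts[color] / total for color in counts}

# Step 5: theoretical values from the wheel design (3 red, 4 blue, 1 green).
wheel = {"red": 3, "blue": 4, "green": 1}
sections = sum(wheel.values())
theoretical = {color: Fraction(n, sections) for color, n in wheel.items()}

for color in wheel:
    print(color, experimental[color], float(theoretical[color]))
```

Blue matches its theoretical value of 0.5 exactly, while red (0.35 against 0.375) and green (0.15 against 0.125) do not, which shows that a perfect match for one event in a small experiment is largely a matter of chance.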

| Total Spins (Trials) | Times Blue Occurred | Relative Frequency of Blue | As a Percentage |
|---|---|---|---|
| 10 | 4 | $\frac{4}{10} = 0.4$ | 40% |
| 20 | 10 | $\frac{10}{20} = 0.5$ | 50% |
| 50 | 23 | $\frac{23}{50} = 0.46$ | 46% |
| 100 | 49 | $\frac{49}{100} = 0.49$ | 49% |
| 1000 | 502 | $\frac{502}{1000} = 0.502$ | 50.2% |

The table illustrates the Law of Large Numbers. With only 10 trials, the relative frequency (0.4) was 0.1 away from the theoretical 0.5. By 1000 trials, the experimental result (0.502) was within 0.002 of it. The approach is not perfectly steady (the 20-spin result happened to land exactly on 0.5 before drifting to 0.46 at 50 spins), but the overall trend is toward the theoretical value.
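To make the trend in the table concrete, the short Python sketch below recomputes each relative frequency and its distance from the theoretical 0.5. The row data is copied from the table. The variable names are illustrative.

```python
# (total spins, times blue occurred) for each row of the table
rows = [(10, 4), (20, 10), (50, 23), (100, 49), (1000, 502)]
theoretical = 0.5

gaps = []
for trials, blue in rows:
    freq = blue / trials
    gap = abs(freq - theoretical)  # distance from the theoretical value
    gaps.append(gap)
    print(f"{trials:>5} spins: relative frequency {freq:.3f}, gap {gap:.3f}")
```

The gap is largest for the 10-spin row and smallest among the non-exact rows at 1000 spins, though it does not shrink at every single step.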

Real-World Applications: Where Relative Frequency Comes to Life

Experimental probability is not just a classroom concept. It is a tool used daily in various fields to make informed predictions and decisions based on empirical evidence.

1. Quality Control in Manufacturing: A factory produces light bulbs. To estimate the defect rate, a quality inspector tests a sample of 500 bulbs and finds 12 that are faulty. The experimental probability (relative frequency) of a bulb being defective is $\frac{12}{500} = 0.024$ or 2.4%. The company can use this figure to predict future production issues and improve their process.

2. Sports Analytics: A basketball player has attempted 850 free throws in their career and made 697 of them. Their free-throw percentage, which is a relative frequency, is $\frac{697}{850} = 0.82$ or 82%. This statistic is used to evaluate the player's skill and predict their likelihood of making the next shot.

3. Medical Testing: A new medical test is administered to 10,000 patients, of whom 1,000 are sick and 9,000 are healthy. It correctly identifies 950 of the 1,000 sick patients (true positives) and 8,820 of the 9,000 healthy patients (true negatives). The test's accuracy is reported as two separate relative frequencies: sensitivity ($\frac{950}{1000} = 95\%$), the proportion of sick patients correctly identified, and specificity ($\frac{8820}{9000} = 98\%$), the proportion of healthy patients correctly identified. These relative frequencies are crucial for doctors interpreting test results.

4. Weather Forecasting: Meteorologists use historical data, in effect a very large set of past trials, to predict weather. If it has rained on 18 of the past 50 March 15ths, the experimental probability of rain on that date is $\frac{18}{50} = 0.36$ or 36%. Figures like this inform the "chance of rain" in forecasts.
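All four applications use the same division. As a rough sketch, the Python snippet below applies one helper to the counts quoted in the list above. The helper name and dictionary labels are illustrative, and the numbers come straight from the examples.

```python
def relative_frequency(event_count, total_trials):
    """Number of times the event occurred divided by the number of trials."""
    if total_trials <= 0:
        raise ValueError("total_trials must be positive")
    return event_count / total_trials


# (times the event occurred, total trials) for each example in the list
examples = {
    "defective bulbs": (12, 500),
    "free throws made": (697, 850),
    "test sensitivity": (950, 1000),
    "test specificity": (8820, 9000),
    "rain on March 15": (18, 50),
}

results = {
    name: relative_frequency(count, trials)
    for name, (count, trials) in examples.items()
}

for name, value in results.items():
    print(f"{name}: {value:.1%}")
```

Sensitivity and specificity are kept as separate entries because each is measured against a different group of patients, sick and healthy respectively.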

Important Questions

Question 1: Can the experimental probability ever be exactly the same as the theoretical probability?

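Yes, it can, but it is not guaranteed; it is a matter of chance. An exact match is only possible when the theoretical probability multiplied by the number of trials is a whole number: 50 rolls of a fair die can never give a relative frequency of exactly $\frac{1}{6}$ for rolling a 4, because $\frac{50}{6}$ is not a whole number. As the number of trials increases, the experimental probability tends to get very close to the theoretical probability due to the Law of Large Numbers, but it may not be a perfect match.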

Question 2: Why is experimental probability important if we already have theoretical probability?

Theoretical probability models an ideal, simplified world. Experimental probability reflects reality, where conditions are never perfect. It is essential when:

- The theoretical probability is too complex or impossible to calculate (e.g., the probability of heads for a real coin that may be slightly biased).

- We need to validate a theory or model using real data.

- We are dealing with events that cannot be repeated under controlled conditions, where historical or survey data is the only guide (e.g., predicting election results).

Question 3: How many trials are needed for a "good" experimental probability estimate?

There is no magic number. It depends on the situation and the desired accuracy. More trials generally lead to a more reliable estimate. In our spinner table, 20 trials happened to land exactly on the theoretical value, but an estimate from so few trials could easily have been further off, as the 10-trial result (0.4) shows; the 1000-trial estimate is far more dependable. Scientists and statisticians use specific formulas to determine sample sizes needed for reliable results in studies and polls.
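One commonly taught formula of this kind is the normal-approximation margin of error for a proportion, $E = z\sqrt{\frac{p(1-p)}{n}}$. The text does not name a specific formula, so the sketch below is an illustration under stated assumptions: independent trials, a 95% confidence level ($z \approx 1.96$), and the hypothetical function names `margin_of_error` and `trials_needed`.

```python
import math


def margin_of_error(p_hat, n, z=1.96):
    """Approximate margin of error for a relative frequency p_hat from n trials.

    Assumes independent trials and the normal approximation (z=1.96 ~ 95%).
    """
    if n <= 0:
        raise ValueError("n must be positive")
    return z * math.sqrt(p_hat * (1 - p_hat) / n)


def trials_needed(margin, p=0.5, z=1.96):
    """Smallest n whose approximate margin of error is at most `margin`.

    p=0.5 is the most cautious choice because it maximizes p * (1 - p).
    """
    if margin <= 0:
        raise ValueError("margin must be positive")
    return math.ceil(z**2 * p * (1 - p) / margin**2)


# Spinner example: how uncertain is an estimate near 0.5?
print(round(margin_of_error(0.5, 20), 3))    # roughly 0.219
print(round(margin_of_error(0.5, 1000), 3))  # roughly 0.031

# Trials needed for a margin of about 5 percentage points.
print(trials_needed(0.05))  # 385
```

Under these assumptions, an estimate from 20 spins is only good to about plus or minus 0.22, while 1000 spins narrows that to about plus or minus 0.03, which is consistent with the pattern in the spinner table.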

Relative frequency, or experimental probability, bridges the gap between mathematical theory and the messy, unpredictable real world. It empowers us to move from asking "What should happen?" to discovering "What did happen?" and, consequently, "What is likely to happen next?" From the simple coin toss to complex climate models, this concept is a cornerstone of data-driven thinking. By understanding how to collect data, apply the simple formula, and interpret the results, we gain a practical tool for making sense of uncertainty. Remember, while a single experiment might give a surprising result, the persistent trend revealed through many trials, which is the Law of Large Numbers at work, tends to bring our experimental findings into line with the predictions of theory.

Footnotes

[1] Law of Large Numbers: A fundamental theorem in probability and statistics. It states that as the number of independent, repeated trials increases, the experimental probability (relative frequency) of an event tends to get closer and closer to its theoretical probability.

[2] Empirical: Based on, concerned with, or verifiable by observation or experience rather than theory or pure logic. Experimental probability is an empirical measure.

[3] Sample: A subset of individuals or observations taken from a larger population, used to make inferences about the population. In experiments, the set of trials conducted is a sample of all possible trials.