Memory Hierarchy: The Pyramid of Speed and Cost

1. Why Do We Need a Hierarchy? The Speed & Cost Dilemma

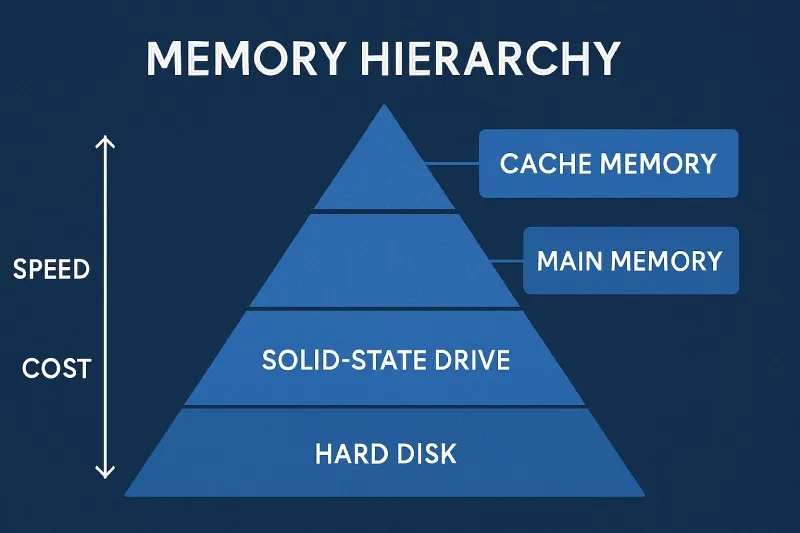

Imagine buying a computer. If we used the fastest possible memory for everything, the price would be sky-high. On the other hand, if we only used cheap, slow storage, the computer would take minutes just to open a program. The memory hierarchy solves this problem by using a mix of different memory types arranged like a pyramid.

At the top of the pyramid, we have a tiny amount of extremely fast and expensive memory (registers and cache). As we go down the pyramid, memory gets slower, cheaper per byte, and much larger in capacity (RAM, then SSDs, then HDDs). The computer's brain (the CPU) works with the top levels most of the time, but it can also call upon the vast storage at the bottom when needed.

2. The Levels of the Hierarchy: A Closer Look

Let's explore each level of the pyramid, from the smallest and fastest to the largest and slowest. The key characteristics that differentiate them are speed (access time), size (capacity), and cost per gigabyte.

| Level | Typical Size | Speed (Access Time) | Cost per GB | Managed By |
|---|---|---|---|---|
| Registers | Bytes (e.g., 32 x 8 bytes) | ~0.3 ns (1 cycle) | Highest | CPU / Compiler |
| Cache (L1, L2, L3) | KB to MB (e.g., 32 MB) | ~1-30 ns | Very High | Hardware |
| RAM (Main Memory) | GB (e.g., 16 GB) | ~80-250 ns | Moderate (~$3/GB) | OS |
| Secondary Storage (SSD) | Hundreds of GB to TB | ~0.1-1 ms | Low (~$0.15/GB) | OS / User |
| Secondary Storage (HDD) | TB (e.g., 4 TB) | ~5-20 ms | Lowest (~$0.02/GB) | OS / User |
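To get a feel for the gaps between levels, the access times in the table can be compared against a register access. The sketch below is only illustrative: it assumes the midpoint of each range in the table as a single representative latency, and the names `LATENCY_NS` and `slowdown_vs_registers` are made up for this example.

```python
# Representative access times in nanoseconds.
# Assumption: each value is the midpoint of the range given in the table.
LATENCY_NS = {
    "Registers": 0.3,
    "Cache": 15.5,            # midpoint of 1-30 ns
    "RAM": 165.0,             # midpoint of 80-250 ns
    "SSD": 550_000.0,         # midpoint of 0.1-1 ms
    "HDD": 12_500_000.0,      # midpoint of 5-20 ms
}


def slowdown_vs_registers(latency_ns):
    """Return how many times slower each level is than a register access."""
    base = latency_ns["Registers"]
    return {level: t / base for level, t in latency_ns.items()}


ratios = slowdown_vs_registers(LATENCY_NS)
for level, ratio in ratios.items():
    print(f"{level:<10} ~{ratio:,.0f}x a register access")
```

With these assumed midpoints, RAM comes out hundreds of times slower than a register, and an HDD tens of millions of times slower, which is why the CPU tries to stay near the top of the pyramid.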

3. The Principle of Locality: Making the Hierarchy Work

The memory hierarchy isn't just a list of parts; it's a system that relies on a clever observation about how programs run: the Principle of Locality. Programs tend to reuse data and instructions they have used recently or those stored nearby. This comes in two flavors:

- Temporal Locality (Time): If you access a piece of data, you'll likely access it again soon. Think of a loop counter in a program; it is used many times. The hierarchy keeps this data in the fast cache.

- Spatial Locality (Space): If you access a piece of data, you'll likely access nearby data soon. When reading a file, the next bytes are probably needed next. The hierarchy loads a whole block of nearby data into cache.
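Both kinds of locality can be observed with a toy model. The following is a minimal sketch of a direct-mapped cache (one simple placement policy among several), not a model of any real CPU; the function name `hit_rate`, the 64-line cache, and the 16-byte block size are assumptions chosen for illustration.

```python
def hit_rate(addresses, num_lines=64, block_size=16):
    """Simulate a tiny direct-mapped cache and return the fraction of hits."""
    lines = [None] * num_lines          # each slot remembers which block it holds
    hits = 0
    for addr in addresses:
        block = addr // block_size      # block that contains this address
        slot = block % num_lines        # direct-mapped: one possible slot per block
        if lines[slot] == block:
            hits += 1                   # data already in cache
        else:
            lines[slot] = block         # miss: load the whole block
    return hits / len(addresses)


# Spatial locality: walk through consecutive addresses.
sequential = list(range(4096))
# Temporal locality: reuse the same few addresses over and over.
loop = [i % 8 for i in range(4096)]
# Little locality: jump 4096 bytes each time, so every access lands in a new block.
strided = [(i * 4096) % (1 << 20) for i in range(4096)]

print(f"sequential: {hit_rate(sequential):.4f}")  # 0.9375
print(f"loop:       {hit_rate(loop):.4f}")        # 0.9998
print(f"strided:    {hit_rate(strided):.4f}")     # 0.0000
```

In the sequential case, each miss loads a 16-byte block and the next 15 accesses hit, which is spatial locality at work. In the strided case, every access maps to the same slot with a different block, so the toy cache never helps.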

Without locality, the hierarchy would fail because the CPU would constantly have to wait for data from slow main memory. This waiting is captured by a formula for the average time to access memory:

$$T_{avg} = HitRate \times T_{cache} + MissRate \times T_{memory}$$

Where $T_{avg}$ is the average access time, $HitRate$ is the probability data is in cache, $MissRate = 1 - HitRate$, $T_{cache}$ is the cache access time, and $T_{memory}$ is the main memory access time.
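To see how strongly the hit rate drives the average, here is a small worked sketch. It assumes the usual weighted-average form, average time = $HitRate \times T_{cache} + MissRate \times T_{memory}$. The function name `average_access_time` and the 1 ns and 100 ns latencies are illustrative assumptions, not measurements.

```python
def average_access_time(hit_rate, t_cache_ns, t_memory_ns):
    """Weighted average of cache and main-memory access times, in nanoseconds."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    miss_rate = 1.0 - hit_rate
    return hit_rate * t_cache_ns + miss_rate * t_memory_ns


# Assumed latencies for illustration: 1 ns cache, 100 ns main memory.
for hr in (0.50, 0.90, 0.99):
    avg = average_access_time(hr, t_cache_ns=1.0, t_memory_ns=100.0)
    print(f"hit rate {hr:.0%}: average access time ~{avg:.2f} ns")
```

Under these assumptions, raising the hit rate from 90% to 99% cuts the average from about 10.9 ns to about 2 ns, so even a few percent of misses dominate the total.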

4. Real-World Example: Playing a Video Game

Let's follow how data moves through the hierarchy when you play a game like "Minecraft" on your computer.

- Secondary Storage (SSD/HDD): The game's code, textures, and world files are stored here. When you launch the game, the OS loads what it needs from this comparatively slow storage into RAM.

- Main Memory (RAM): The game is now in RAM. This is where active programs live. As you play, the game's executable code and the textures for the world around you are held here.

- Cache (L1, L2, L3): As the CPU needs to run specific instructions (like calculating physics for a block), it first looks in the ultra-fast cache. The hardware automatically predicts what instructions you'll need next (spatial locality) and loads them from RAM into cache.

- Registers: Finally, the CPU moves the exact data needed for an immediate calculation (like $x + 5$) from the cache into its own internal registers. The arithmetic is performed on the data inside the registers, typically in a single clock cycle for a simple operation such as addition.

Without the hierarchy, if the CPU had to wait for the SSD every time it did a tiny calculation, the game would be unplayable—running at perhaps one frame per minute instead of 60 per second.

5. Important Questions About Memory Hierarchy

Q: Why can't all memory be as fast as registers?

The main reason is cost and physical limits. Registers and cache are built into the CPU itself using the fastest, most power-hungry, and most expensive circuitry (such as SRAM cells for cache). They also must be physically tiny to be close to the processing cores. Building gigabytes of this type of memory would make a single computer cost millions of dollars and generate immense heat.

Q: What happens when the data is not in the cache (a cache miss)?

When a cache miss occurs, the CPU's progress stalls for a moment. It sends a request to the next level of memory (say, L2 cache, then L3, then RAM). The requested data is fetched from that slower level and copied up into the cache, and the CPU then continues. Modern CPUs try to hide this delay by doing other work while waiting, for example through out-of-order execution and prefetching.
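The fall-through on a miss can be sketched as a chain of lookups. This is a simplified model under stated assumptions: each cache level evicts its least recently used entry, RAM always holds the data, and the capacities and latencies (1, 4, 30, and 100 ns) are invented for illustration. `Level` and `access` are hypothetical names, not part of any real API.

```python
from collections import OrderedDict


class Level:
    """One cache level with least-recently-used eviction (a simplifying assumption)."""

    def __init__(self, name, capacity, latency_ns):
        self.name = name
        self.capacity = capacity
        self.latency_ns = latency_ns
        self.data = OrderedDict()       # oldest entry first

    def get(self, addr):
        if addr in self.data:
            self.data.move_to_end(addr)  # mark as recently used
            return True
        return False

    def put(self, addr):
        self.data[addr] = True
        self.data.move_to_end(addr)
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)  # evict the least recently used entry


def access(levels, ram_latency_ns, addr):
    """Look for addr level by level; return (where it was found, total latency in ns)."""
    total = 0.0
    for i, level in enumerate(levels):
        total += level.latency_ns        # pay for checking this level
        if level.get(addr):
            found = level.name
            break
    else:
        total += ram_latency_ns          # missed every cache: go to RAM
        found = "RAM"
        i = len(levels)
    for upper in levels[:i]:             # copy the data into the faster levels
        upper.put(addr)
    return found, total


levels = [Level("L1", 2, 1.0), Level("L2", 4, 4.0), Level("L3", 8, 30.0)]
print(access(levels, 100.0, 0))   # ('RAM', 135.0): first touch misses everywhere
print(access(levels, 100.0, 0))   # ('L1', 1.0): now it is in the fastest level
```

The second access is far cheaper than the first because the miss brought the data up into every cache level on its way to the CPU.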

Q: Is cloud storage part of the memory hierarchy?

Not directly. The memory hierarchy refers to storage directly attached to a single computer. Cloud storage is another computer's secondary storage (a drive in a data center) accessed over a network. Every request adds a network round trip, often tens of milliseconds or more over the internet, on top of the remote drive's own access time, so it is usually treated as a separate category of "archival" or "external" storage, below the hierarchy we've discussed.

The memory hierarchy is a brilliant engineering compromise. It uses a small amount of expensive, fast memory (registers and cache) to feed the CPU at top speed, a moderate amount of moderately priced memory (RAM) for active programs, and a large amount of cheap, slow storage (SSDs/HDDs) to keep everything permanently. This layered approach gives us the illusion of a machine that is fast, large, and affordable all at once.

📌 Footnote

[1] CPU (Central Processing Unit): The "brain" of the computer that executes instructions.

[2] Cache: A small, very fast memory located close to the CPU core that stores copies of frequently used data from main memory.

[3] RAM (Random Access Memory): The main working memory of a computer; it is volatile, meaning it loses data when power is off.

[4] SSD (Solid State Drive) & HDD (Hard Disk Drive): Types of non-volatile secondary storage used for long-term data retention. SSDs are faster and more expensive than HDDs.

[5] ns (nanosecond) & ms (millisecond): Units of time. $1 ms = 1,000,000 ns$. A nanosecond is one-billionth of a second.