Huffman Coding: The Clever Shortcut for Sending Messages

From Morse Code to Binary Trees

Imagine you are sending a secret message to a friend. In standard encoding, like ASCII, every letter uses the same number of bits[4]. The letter 'e' (very common) and the letter 'z' (quite rare) would both take up 8 bits. This is not efficient! Huffman Coding solves this by taking inspiration from an older system: Morse Code.

In Morse Code, the common letter 'E' is a single dot (.), while the less common 'Q' is --.- (longer). David A. Huffman, a graduate student in 1951, formalized this idea into an optimal algorithm for computers using binary trees and priority queues.

Building a Huffman Tree: A Step-by-Step Example

Let's encode the word "HELLO". First, we count the frequency of each character.

| Character | Frequency (Count) |

|---|---|

| H | 1 |

| E | 1 |

| L | 2 |

| O | 1 |

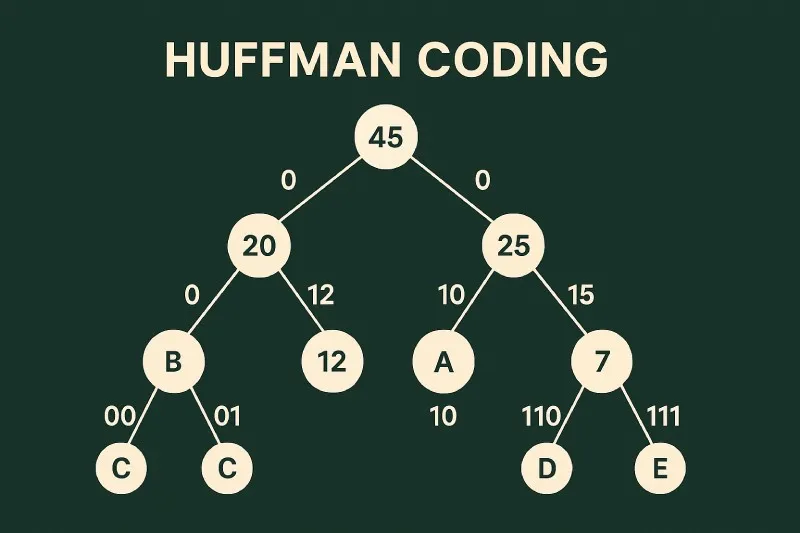

Now, follow these steps to build the tree:

Step 1: Create a leaf node for each character with its frequency. Put all nodes in a priority queue (sorted by lowest frequency first).

Step 2: While there is more than one node in the queue:

a. Remove the two nodes with the smallest frequency. Let's call them $node_{left}$ and $node_{right}$.

b. Create a new internal node with a frequency equal to the sum of the two nodes' frequencies. $f_{new} = f_{left} + f_{right}$.

c. Make $node_{left}$ the left child and $node_{right}$ the right child of the new node.

d. Add this new node back into the priority queue.

Step 3: The remaining node is the root of the Huffman Tree.

Let's visualize this process for "HELLO":

Tree Construction Walkthrough:

1. Start: Nodes: H(1), E(1), L(2), O(1).

2. Combine H(1) and E(1) -> Node HE(2). Queue: L(2), O(1), HE(2).

3. Combine O(1) and L(2) -> Node OL(3). Queue: HE(2), OL(3).

4. Combine HE(2) and OL(3) -> Root Node HEOL(5). Queue: HEOL(5).

Now, to assign codes, label every left branch with 0 and every right branch with 1. The code for a character is the path from the root to its leaf.

| Character | Possible Code (Path) | Length |

|---|---|---|

| L (most frequent) | 11 | 2 bits |

| H | 00 | 2 bits |

| E | 01 | 2 bits |

| O | 10 | 2 bits |

The word "HELLO" in standard 8-bit ASCII would be 5 * 8 = 40 bits. In our Huffman code, it becomes: H(00) E(01) L(11) L(11) O(10) = 0001111110, which is only 10 bits! This is a 75% reduction. In real scenarios with larger texts and varied frequencies, the savings are significant.

Why It Works: The Prefix Property and Decoding

A crucial feature of Huffman codes is that no code is a prefix of another. This is called the prefix property. Look at our codes: 00, 01, 10, 11. No code starts with another code. Why is this important?

It makes decoding instant and unambiguous. If you receive the bitstream 0001111110, you start at the root of the tree and follow the bits:

Bit 0 (go left), Bit 0 (go left) -> you immediately reach leaf 'H'. Output H. Go back to root.

Next bit 0 (left), Bit 1 (right) -> leaf 'E'. Output E.

Next bit 1 (right), Bit 1 (right) -> leaf 'L'. Output L. And so on.

Without the prefix property, you would need special markers or pauses, like in Morse Code, which would waste space. Huffman Coding builds this property directly into the tree.

Huffman Coding in the Real World

You use Huffman Coding every day, often without knowing it. It is a critical component in many widespread file formats because it squeezes out redundancy without any loss of quality.

1. File Compression (ZIP, GZIP, 7z): These tools often use Huffman Coding as one step in a multi-stage process. They first find repeated patterns, then use Huffman to efficiently encode those patterns.

2. Image Compression (PNG, JPEG): The PNG image format uses Huffman Coding in its final stage (after filtering and other techniques) to compress the pixel data losslessly. JPEG uses a similar method called Huffman coding in its lossy compression process to encode the transformed image data.

3. Digital Communication: Protocols for sending data over networks (like DEFLATE, used in HTTP) employ Huffman to reduce the amount of data that needs to be transmitted, making websites load faster.

A simple analogy is packing a suitcase. Instead of putting each item in a same-sized box (fixed-length coding), you use custom-fitted pouches (variable-length coding). Socks (common) get a tiny pouch, while a winter coat (rare) gets a large one. The suitcase holds much more this way.

Important Questions

No. Huffman is optimal for a fixed set of symbols and frequencies where each code must be an integer number of bits. However, other methods like Arithmetic Coding can be slightly more efficient by allowing fractional bits per symbol, especially for very skewed probabilities. Huffman remains extremely popular due to its simplicity and speed.

The main disadvantage is that the compression model (the tree or code table) must be stored or transmitted along with the compressed data. For very short messages, the overhead of storing the tree can outweigh the compression benefits. Also, it is a "static" model for the entire data set; adaptive methods exist that build the tree as they read the data.

Absolutely! Huffman Coding works on any data that can be broken down into symbols with varying frequencies. It is used to compress the results of other compression stages, like in images (pixel difference values) or audio (frequency coefficients). The "symbols" can be numbers, commands, or any discrete token.

Footnote

[1] Huffman Coding: An algorithm for lossless data compression developed by David A. Huffman.

[2] Binary Code: A coding scheme using only two possible values, typically 0 and 1, which are the fundamental units (bits) in computing.

[3] Lossless Compression: A data compression method that allows the original data to be perfectly reconstructed from the compressed data.

[4] Bit: The most basic unit of information in computing, a binary digit (either a 0 or a 1).

[5] ASCII (American Standard Code for Information Interchange): A character encoding standard that represents text in computers, using 7 or 8 bits per character.