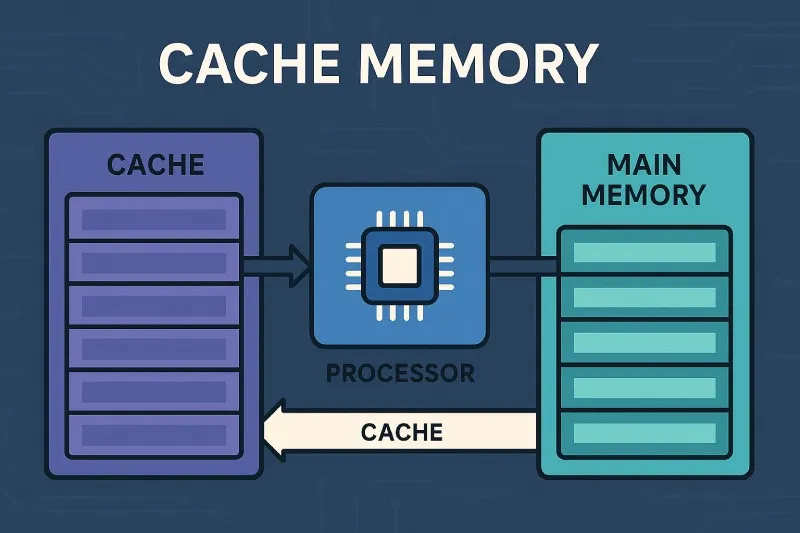

Cache Memory: A small, fast memory located close to the CPU and used to store frequently accessed data and instructions.

🏗️ 1. Memory tiers: From the palace to the warehouse

Imagine you are solving a math problem. You have a small notebook on your desk (cache), a bookshelf in your room (RAM), and a library in another building (SSD/HDD). You keep the formula you are using right now in the notebook. That is cache memory. It is tiny, but it is right under your nose. Without it, you would walk to the library for every number — your computer would feel frozen.

The Central Processing Unit (CPU) works at an incredible speed. It can perform billions of operations per second. However, main memory (DRAM) is much slower: a DRAM access takes roughly 50-100 times longer than an L1 cache access. If the CPU had to wait for the RAM every single time, it would spend most of its time idle. Cache memory bridges this gap. It is made of Static RAM (SRAM), which is faster and more expensive than the Dynamic RAM (DRAM) used for main memory. That is why cache capacity is small, usually measured in kilobytes or a few megabytes.

| Memory type | Typical size | Access time | Relative cost per GB |
|---|---|---|---|
| L1 Cache (SRAM) | 32-128 KB | ~0.5-1 ns | Highest |
| L2 Cache (SRAM) | 256 KB - 1 MB | ~2-5 ns | High |
| L3 Cache (SRAM) | 2 - 32 MB | ~10-20 ns | Medium |
| RAM (DRAM) | 4 - 32 GB | ~50-100 ns | Low |
| SSD (Flash) | 128 GB - 2 TB | ~100,000 ns (0.1 ms) | Very low |
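To get a feel for these numbers, it can help to express them in CPU clock cycles. The sketch below is only an illustration: the 3 GHz clock speed and the names `CLOCK_GHZ`, `ACCESS_TIME_NS`, and `ns_to_cycles` are assumptions made for this example, and the latencies are the upper bounds from the table.

```python
# Assumed clock speed for illustration only; real CPUs vary.
CLOCK_GHZ = 3.0

# Upper-bound access times in nanoseconds, taken from the table above.
ACCESS_TIME_NS = {
    "L1 cache": 1,
    "L2 cache": 5,
    "L3 cache": 20,
    "RAM": 100,
    "SSD": 100_000,
}


def ns_to_cycles(ns: float, clock_ghz: float = CLOCK_GHZ) -> int:
    """Convert a latency in nanoseconds to CPU clock cycles."""
    # A clock of N GHz ticks N times per nanosecond.
    return round(ns * clock_ghz)


for name, ns in ACCESS_TIME_NS.items():
    print(f"{name:8s} {ns:>8} ns  ~ {ns_to_cycles(ns):>7} cycles")
```

Under these assumptions, one trip to RAM costs about 300 cycles in which the core could otherwise have been doing useful work.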

📦 2. The three squads: L1, L2 and L3 cache

Modern processors do not use a single cache; they use three levels, like a relay race. L1 cache is the smallest but fastest. It sits right inside the CPU core. It is often split into L1i (instructions) and L1d (data). L2 cache is slightly larger, still very fast, and sometimes dedicated per core. L3 cache is shared among all cores, larger, and a bit slower. When the CPU needs data, it checks L1, then L2, then L3, and finally RAM. If it finds the data in cache — a cache hit — the process is fast. If not — a cache miss — it must go to main memory, which costs a lot of time.
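The lookup order described above can be sketched in a few lines. This is a deliberately simplified model, not how real hardware is built: each level is an unbounded Python set, nothing is ever evicted, and a hit in L2 or L3 does not copy the data up into L1. The function name `lookup` and the address `0x40` are made up for the example.

```python
def lookup(address, levels):
    """Search each cache level in order and return where the data was found.

    `levels` is a list of (name, set_of_cached_addresses) pairs, fastest first.
    """
    for name, contents in levels:
        if address in contents:
            return name  # cache hit at this level
    # Missed every level: fetch from RAM and fill the caches on the way back.
    for _, contents in levels:
        contents.add(address)
    return "RAM"


levels = [("L1", set()), ("L2", set()), ("L3", set())]
print(lookup(0x40, levels))  # first access misses everywhere: prints RAM
print(lookup(0x40, levels))  # second access hits right away: prints L1
```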

Think of a chef preparing three dishes. His countertop (L1) holds salt and pepper. The kitchen rack (L2) holds spices he uses often. The pantry (L3) has ingredients for the whole menu. The warehouse (RAM) stores everything else. Without the countertop and rack, the chef would run to the pantry hundreds of times per meal.

📐 Cache performance formula: The average memory access time (AMAT) is:

$$AMAT = T_{hit} + \text{miss rate} \times \text{miss penalty}$$

If a cache has $T_{hit}=1\ ns$, miss rate $5\%$ and miss penalty $50\ ns$, the average access is $1 + 0.05 \times 50 = 3.5\ ns$ — still much faster than $50\ ns$.
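The arithmetic above is easy to turn into a small helper for trying other values. This is a minimal sketch; the function name `amat` and its parameter names are chosen here for illustration.

```python
def amat(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time: hit time plus the average cost of misses."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# The example from the text: 1 ns hit time, 5% miss rate, 50 ns miss penalty.
print(f"{amat(1, 0.05, 50):.1f} ns")  # prints 3.5 ns
```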

🎮 3. Real-world mission: Gaming and video streaming

Imagine you are playing a racing game on a console. The track, the opponent cars, and the physics rules are stored on the SSD or Blu-ray disc. As you play, the game loads the current track into RAM. But the CPU must calculate the position of every car 60 times per second. Those calculations run the same steering instructions over and over, and this temporal locality keeps the loop in L1 and L2 cache. The car’s coordinates are stored in a small array. Memory is moved into cache one whole cache line at a time (commonly 64 bytes), so when the CPU reads coordinate [i], its neighbor [i+1] usually arrives in cache along with it. This is spatial locality. Without it, each frame would be delayed, causing stuttering.
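Both kinds of locality can be seen in a toy simulation. The sketch below models a direct-mapped cache, which is one simple cache organization picked for brevity; real L1 caches are usually set-associative. The 64-byte line size, the 32 KB capacity, and the access patterns are all assumptions made for this example.

```python
LINE_SIZE = 64   # bytes per cache line (assumed)
NUM_LINES = 512  # 512 lines x 64 B = 32 KB, like a small L1 cache


def hit_ratio(addresses):
    """Replay byte addresses through a direct-mapped cache; return hits / accesses."""
    cache = [None] * NUM_LINES   # each slot remembers which memory line it holds
    hits = 0
    total = 0
    for addr in addresses:
        line = addr // LINE_SIZE  # which 64-byte memory line the byte lives in
        slot = line % NUM_LINES   # direct-mapped: each line has exactly one slot
        if cache[slot] == line:
            hits += 1
        else:
            cache[slot] = line    # miss: load the whole line, evicting the old one
        total += 1
    return hits / total


# 4-byte coordinates read one after another: 16 of them share each 64-byte line.
sequential = [i * 4 for i in range(4096)]
# Same number of reads, but every read lands on a different line.
strided = [i * 64 for i in range(4096)]

print(f"sequential:       {hit_ratio(sequential):.4f}")      # spatial locality
print(f"strided:          {hit_ratio(strided):.4f}")         # no locality
print(f"sequential twice: {hit_ratio(sequential * 2):.4f}")  # adds temporal locality
```

In this model the sequential pass misses only on the first element of each line, the strided pass never hits, and repeating the sequential pass hits every time on the second round because the whole array fits in the simulated cache.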

Another example is video playback. When you watch a 4K movie, the decoder reads a block of compressed data, decompresses it, and sends it to the screen. The decompression algorithm uses the same math table (e.g., for the inverse discrete cosine transform) millions of times. This table stays in L2/L3 cache. Without cache, the processor would fetch the table from RAM each time, increasing power consumption and possibly causing dropped frames.

❓ 4. Important questions about cache memory

Q: Why not build all of main memory from fast SRAM?

A: SRAM needs 6 transistors per bit; DRAM needs only 1 transistor and 1 capacitor. If 16 GB of RAM were built with SRAM, it would take several times the chip area, draw more power, and cost far more. Cache uses expensive SRAM only for the most critical data.

Q: Does the CPU have to wait when a cache miss occurs?

A: Yes, it stalls. Modern CPUs try to hide this with out-of-order execution (doing other work while waiting), but a long enough miss still causes a "bubble" in the pipeline. That is why cache hit ratio ($\frac{\text{hits}}{\text{total accesses}}$) is crucial. A 97% hit ratio sounds good, but if the penalty is huge, performance still suffers.
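To put numbers on that last point, here is a worked comparison using the same arithmetic as the AMAT example in section 2. A 97% hit ratio means a 3% miss rate. The 1 ns hit time and both miss penalties are assumed values chosen for illustration.

$$
\begin{aligned}
\text{Miss penalty } 50\ ns:&\quad 1 + 0.03 \times 50 = 2.5\ ns \\
\text{Miss penalty } 200\ ns:&\quad 1 + 0.03 \times 200 = 7\ ns
\end{aligned}
$$

With the same hit ratio, the larger penalty makes the average access almost three times slower.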

Q: Do mobile processors have cache memory too?

A: Absolutely. ARM-based processors like Apple’s M-series or Snapdragon have L1 and L2 caches plus a last-level cache. That last level is often called "system level cache" (SLC) and is shared between CPU, GPU, and other components. It saves battery because accessing DRAM consumes much more power than hitting cache.

🎯 Conclusion: The invisible turbo